FreeBSD 4BSD vs ULE Scheduler

In late 2011, there was an extremely long thread, "SCHED_ULE should not be the default", spread across the FreeBSD-stable and FreeBSD-current mailing lists. That thread contains many claims and counter-claims but very little hard evidence to back them up. This page attempts to provide some real numbers.

Summary

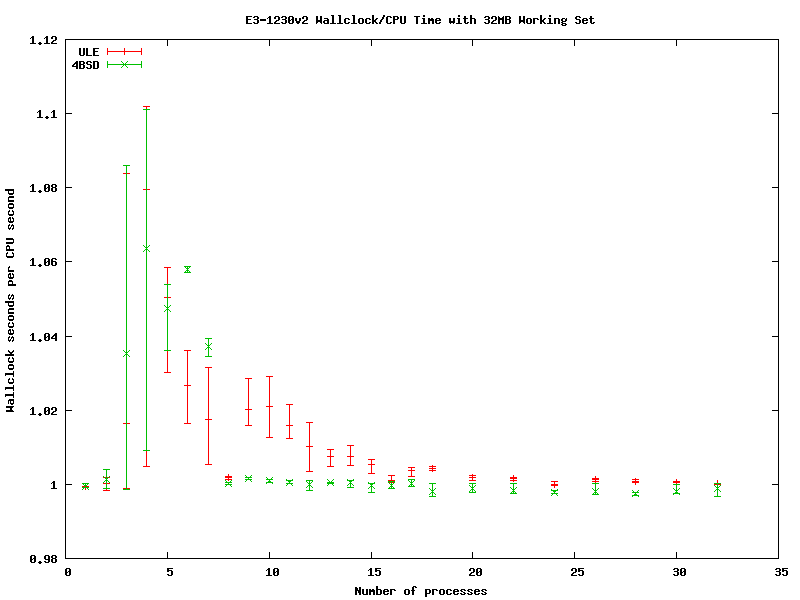

With r227746 on a SPARC, the better scheduler depends on both the number of processes and the working set size, and whether you want to minimise total CPU time or wallclock time. With r251496 on an E3 Xeon, it doesn't matter - they are virtually identical.

Details

The following represents the results of a synthetic benchmark run on:

- A 16-core SunFire V890 server 1) running FreeBSD 10-current 2) (4BSD dmesg, ULE dmesg). This is a server-grade NUMA SPARC system, so the results aren't necessarily comparable with a multicore x86 desktop system. Note that this system is no longer available, so additional tests cannot be run.

- An Intel Xeon E3-1230v3 (3.3GHz quad-core with hyperthreading) CPU in a Supermicro X9SCA-F motherboard with 32GB DDR3-1600 RAM, running FreeBSD 10-current/amd64 r251496.

The benchmark runs multiple copies (processes) of a core loop that repeatedly cycles through an array of doubles (to provide a pre-defined working set size), incrementing them. The source code can be found at loop.c. The tests were run in single-user mode using both the 4BSD and ULE schedulers. In all cases, the test was run 5 times with a varying number of processes and working-set sizes of 1KiB, 4MiB and 32MiB. For the V890, 1, 2, 4, 6, 8, 10, 12, 14, 15, 16, 17, 18, 20, 24, 28, 31, 32, 33, 36, 40, 48, 56 and 64 processes were used. For the Xeon, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 20, 22, 24, 26, 28, 30 and 32 processes were used.
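To make the shape of the workload concrete, the following is a minimal Python sketch of the kind of loop described above. It is not loop.c and is not suitable for taking measurements; the function names are hypothetical, and it assumes that a double is 8 bytes and that one "iteration" increments a single array element (the page does not say whether an iteration is one element or one full pass).

```python
from array import array

DOUBLE_BYTES = 8  # assumption: 8-byte IEEE doubles, as on both test machines


def doubles_in_working_set(working_set_bytes):
    """Number of doubles needed to span the requested working set."""
    return working_set_bytes // DOUBLE_BYTES


def run_kernel(working_set_bytes, iterations):
    """Cycle through an array of doubles, incrementing one element per iteration.

    Assumption: an 'iteration' is a single element increment.
    """
    n = doubles_in_working_set(working_set_bytes)
    data = array("d", [0.0]) * n  # zero-filled array of n doubles
    idx = 0
    for _ in range(iterations):
        data[idx] += 1.0
        idx += 1
        if idx == n:  # wrap around so the whole working set keeps being touched
            idx = 0
    return data
```

Under these assumptions the three working-set sizes correspond to arrays of 128, 524288 and 4194304 doubles. In the real benchmark, several copies of such a loop run concurrently as separate processes.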

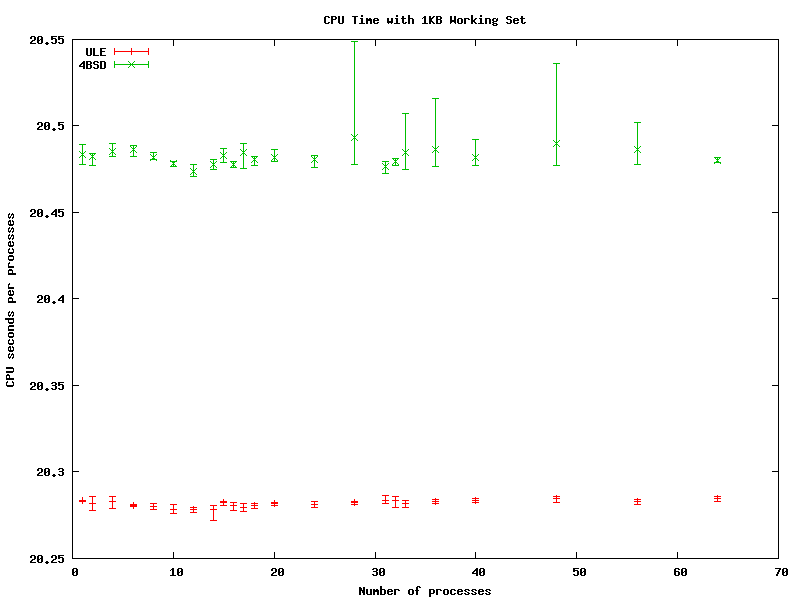

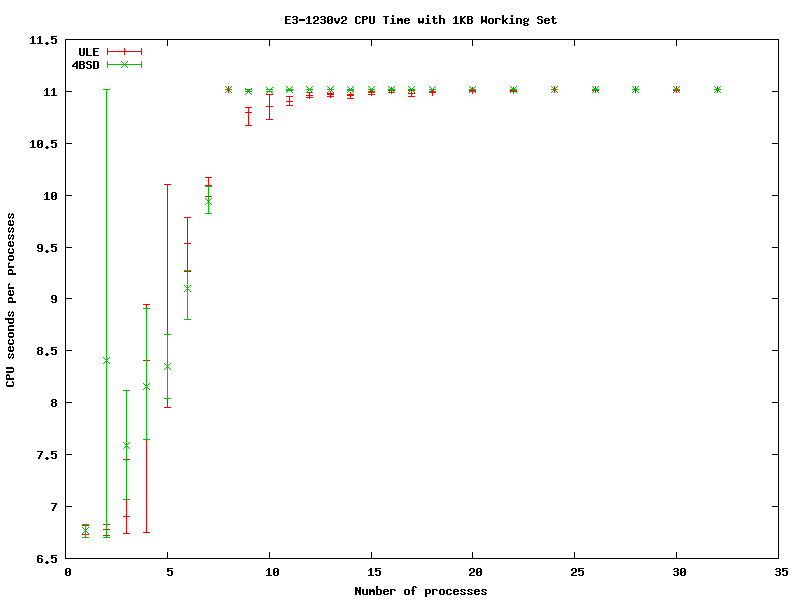

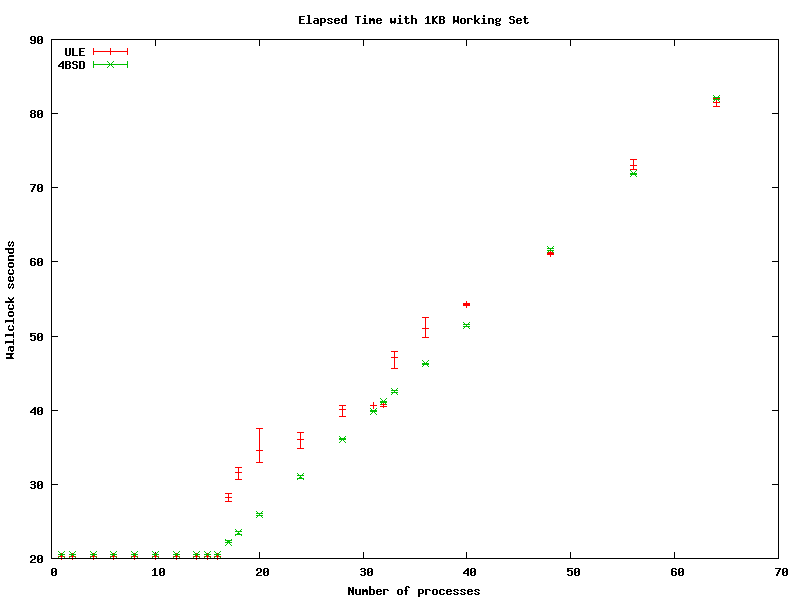

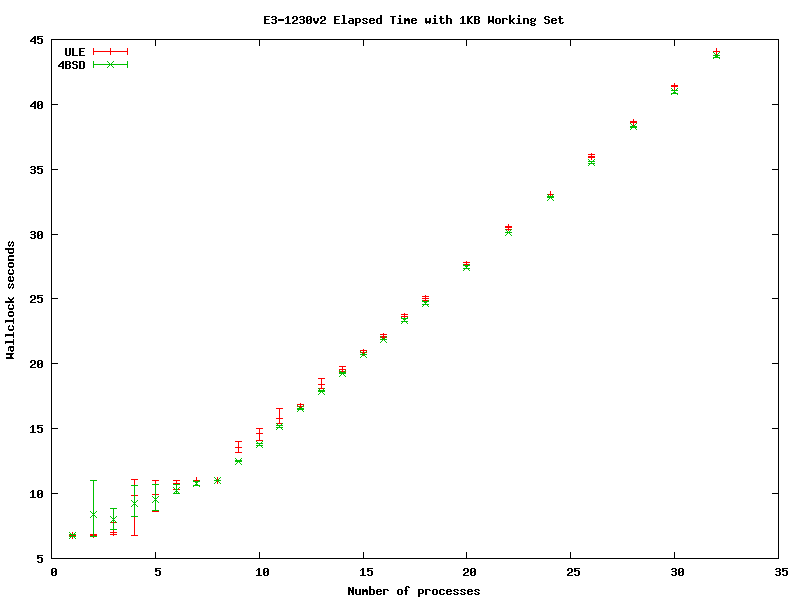

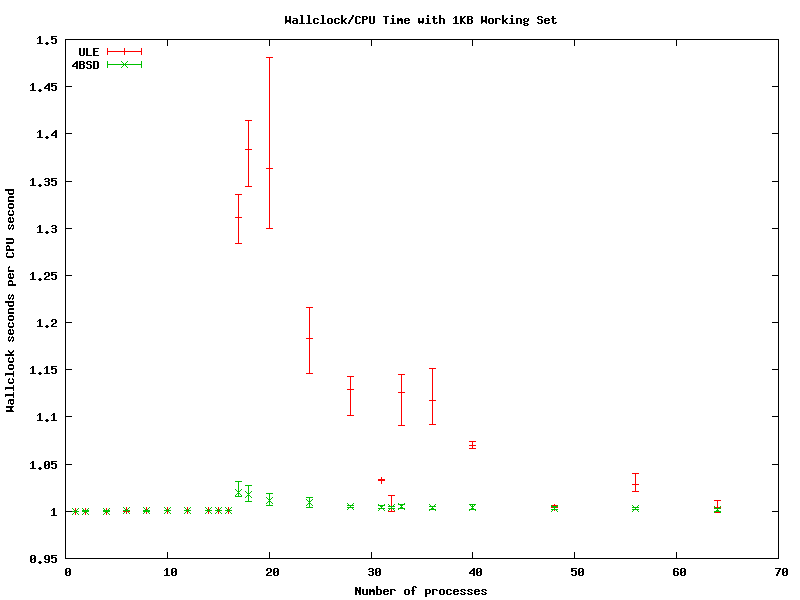

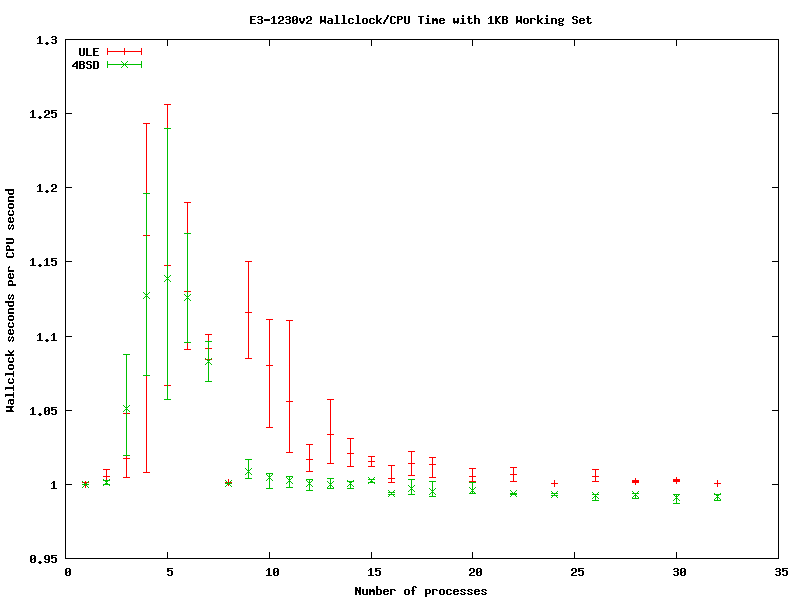

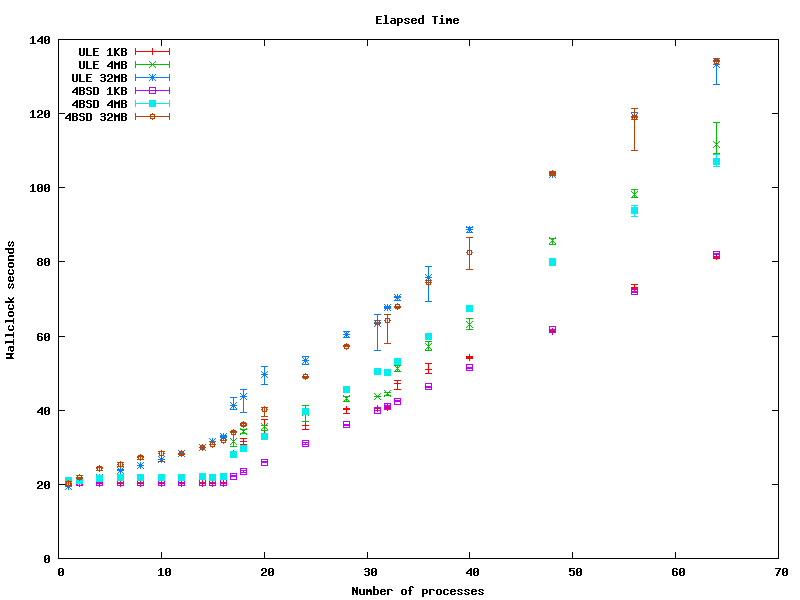

1KiB Working Set

This is the case where everything fits into L1 cache. 1.6e9 iterations were used on the V890 and 5e9 iterations were used on the Xeon.

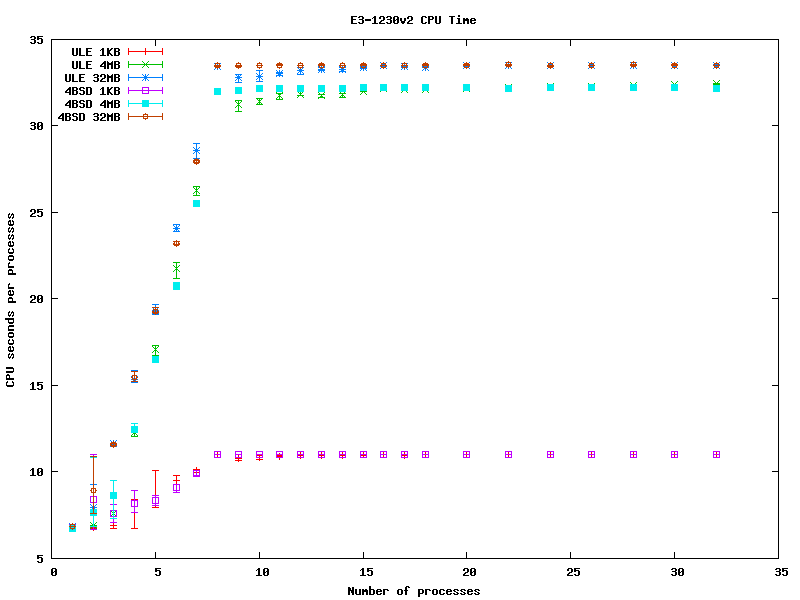

The V890 CPU time graph shows that ULE is very slightly more efficient than 4BSD and (pleasingly) that the amount of CPU time taken to perform a task is independent of the number of active processes for either scheduler.

The Xeon CPU time graph is far less clean and shows the impact of hyperthreading, rather than real cores, with CPU time for >10 processes stabilising at nearly twice the CPU time for a single process. The reason for the wide distribution of times for 2-6 processes is unclear but, at least for the 4BSD scheduler, it is probably due to incorrect allocation of processes to hardware threads.

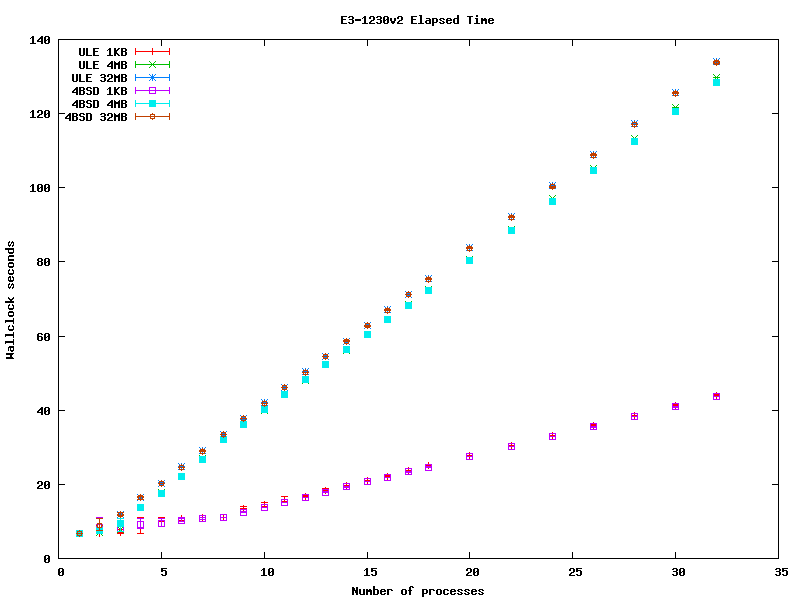

The V890 wallclock time graph shows that both schedulers are well-behaved until there are more processes than cores. Once there are more processes than cores, 4BSD remains well-behaved, whilst ULE has significant jumps in wallclock time, i.e. the same set of tasks takes longer to run with ULE. The scheduler efficiency graph below shows this more clearly.

Again, between 2 and 7 processes, the Xeon is not well-behaved.

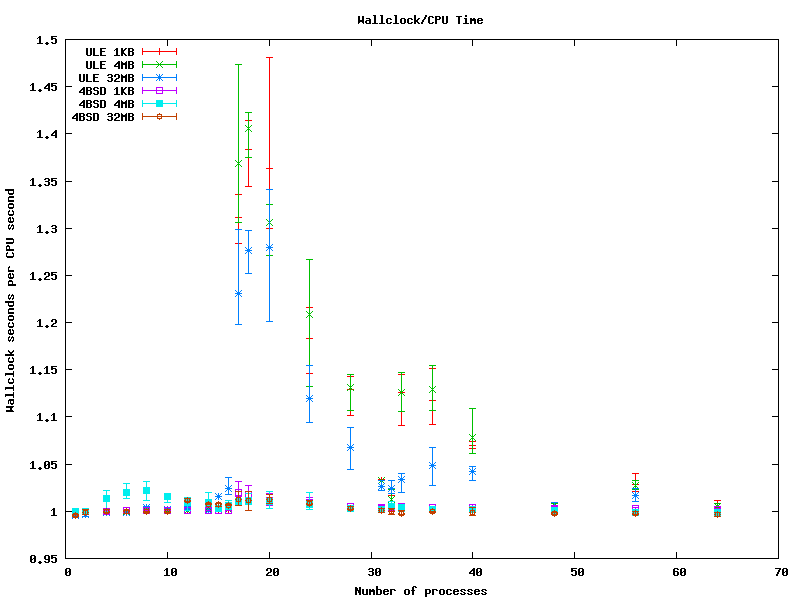

This graph shows scheduler efficiency as a ratio of wallclock time to CPU time. A perfect scheduler would have a ratio of 1 and both schedulers do a good job up to 16 processes. Beyond this, 4BSD has a small jump whilst ULE has a significant jump - forming roughly a sawtooth that peaks at about 18 processes and slopes back to 1 at 32 processes before jumping up again and sloping back to 1 at 48 processes.
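The page does not spell out how the ratio is normalised so that a perfect scheduler scores 1. One plausible reading, sketched here as an assumption, is to compare the measured wallclock time against the wallclock time an ideal scheduler would need, namely the total CPU time divided evenly over the CPUs that can be kept busy. The function name and parameters are hypothetical.

```python
def scheduler_efficiency(wallclock_s, total_cpu_s, n_processes, n_cpus):
    """Ratio of measured wallclock time to the ideal wallclock time.

    Assumption: the ideal scheduler spreads the total CPU time evenly over
    min(n_processes, n_cpus) CPUs, so a perfect result is 1.0.
    """
    usable_cpus = min(n_processes, n_cpus)
    ideal_wallclock_s = total_cpu_s / usable_cpus
    return wallclock_s / ideal_wallclock_s


# Illustrative numbers only, not measurements from this page:
# 32 processes using 10 s of CPU each on 16 CPUs, finishing after 30 s.
ratio = scheduler_efficiency(30.0, 320.0, 32, 16)
```

With these made-up figures the ideal wallclock time is 20 s, so finishing in 30 s corresponds to a ratio of 1.5.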

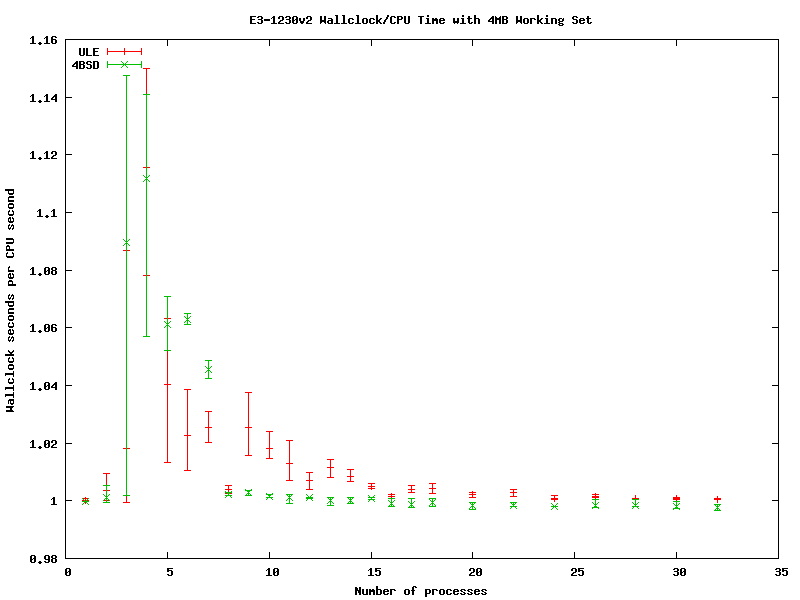

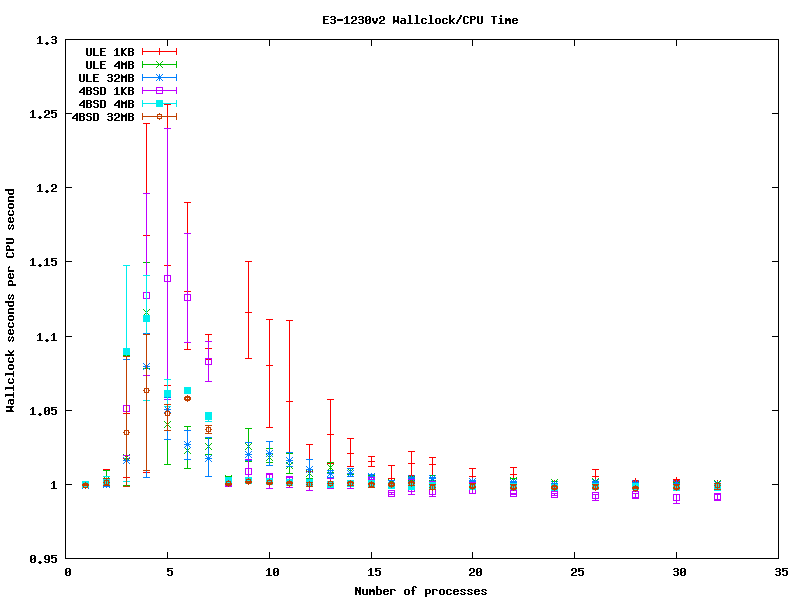

As with the V890 results, there is a sawtooth pattern with a number-of-threads periodicity, though it is far less pronounced than on the V890. On the downside, neither scheduler does a good job between 2 and 7 processes.

Both sets of results suggest that ULE does not do a good job of timesharing when there are more CPU-bound processes than cores - instead it preferentially schedules already running processes.
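A deliberately oversimplified model illustrates why such behaviour would produce a sawtooth. Assume, purely for illustration, that identical CPU-bound processes are never timeshared: the first batch of processes (one per CPU) runs to completion, then the next batch starts. The wallclock time is then a whole number of batches, whereas a perfect timesharing scheduler would need only `n_processes / n_cpus` batch-lengths. The function below is a hypothetical sketch of that model, not a description of how ULE actually works.

```python
import math


def batch_model_ratio(n_processes, n_cpus):
    """Wallclock/ideal ratio if processes run to completion in batches of n_cpus.

    Assumption: no timesharing at all between equal-length CPU-bound processes.
    """
    if n_processes <= n_cpus:
        return 1.0  # every process gets its own CPU
    batches = math.ceil(n_processes / n_cpus)  # whole batches actually needed
    ideal_batches = n_processes / n_cpus       # perfect timesharing
    return batches / ideal_batches


# Ratios for a 16-CPU machine, keyed by process count.
ratios = {n: batch_model_ratio(n, 16) for n in (16, 17, 24, 32, 33, 48)}
```

In this model the ratio is exactly 1 at 16, 32 and 48 processes, jumps just above each multiple of 16, and then slopes back down, with each successive peak lower than the last. The measured V890 curve peaks at about 18 processes rather than 17, so the real scheduler is clearly not this extreme; the model only shows that a preference for already running processes is enough to give the observed shape.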

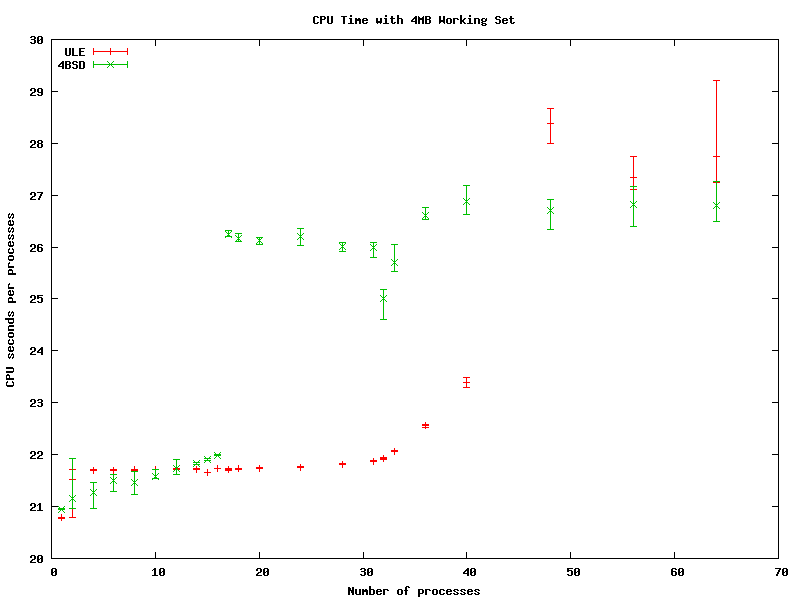

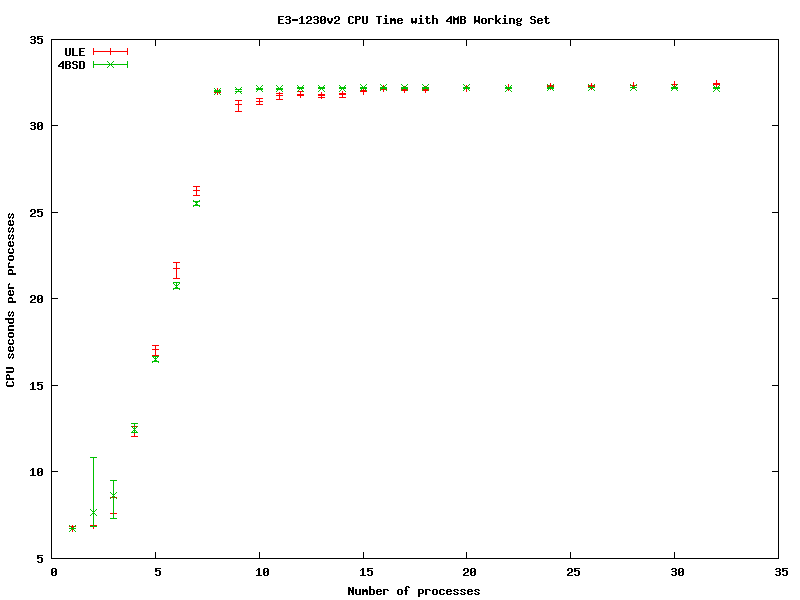

4MiB Working Set

This is the case where a couple of processes fit into L2 cache. 1.2e9 iterations were used.

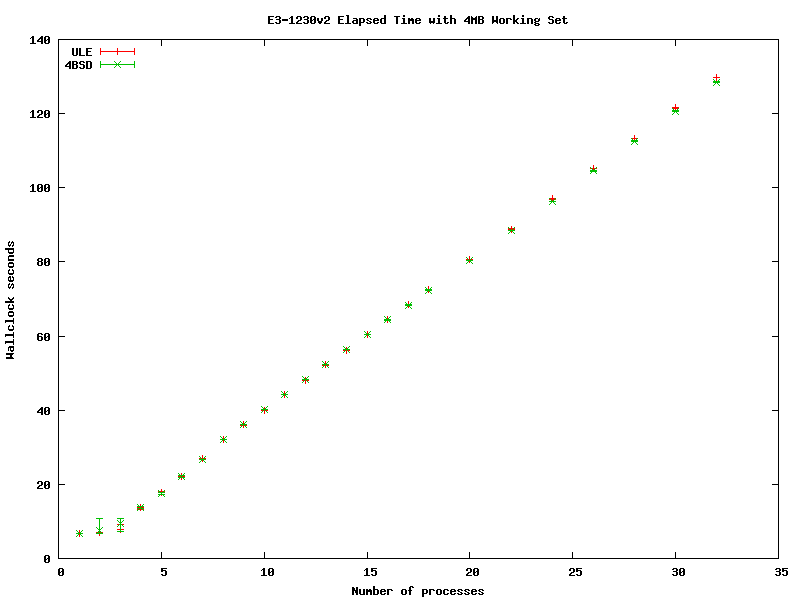

The CPU time graph shows that both schedulers behave similarly for fewer than 4 processes and between 10 and 16 processes. Between 4 and 10 processes, 4BSD uses slightly less CPU than ULE. Between 17 and about 40 processes, ULE uses significantly less CPU than 4BSD. Beyond about 48 processes, 4BSD again takes the lead.

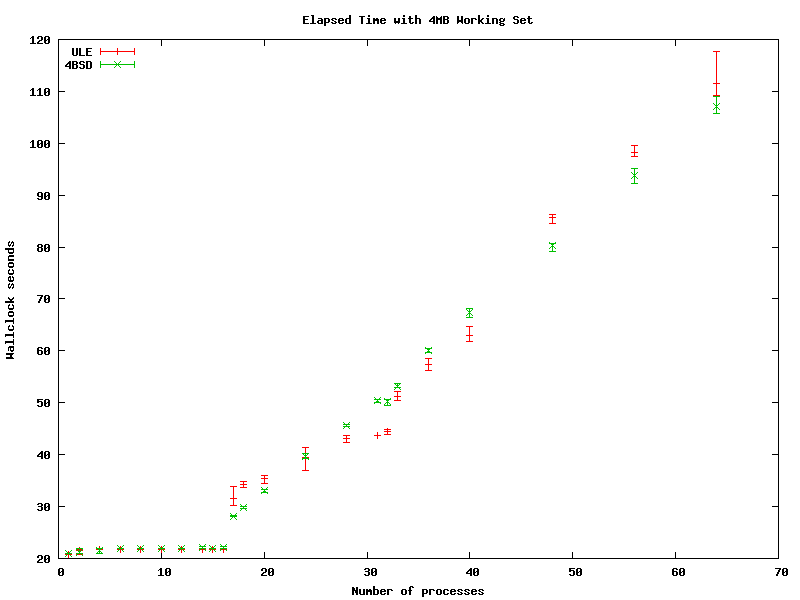

The wallclock time graph shows that both schedulers are well-behaved until there are more processes than cores. Once there are more processes than cores, 4BSD remains well-behaved, whilst ULE has significant variations away from the diagonal slope - though unlike the 1KiB case, sometimes ULE does better than 4BSD.

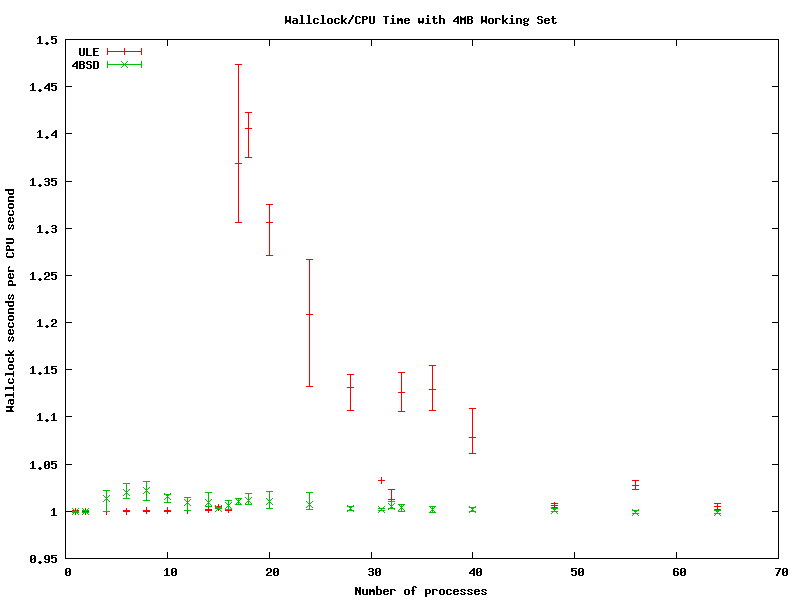

The 4BSD scheduler maintains a fairly constant efficiency, with only a slight bump between 4 and 12 processes. On the other hand, ULE provides a constant efficiency between 1 and 16 processes but then shows the same sawtooth pattern that was seen with the 1KiB working set.

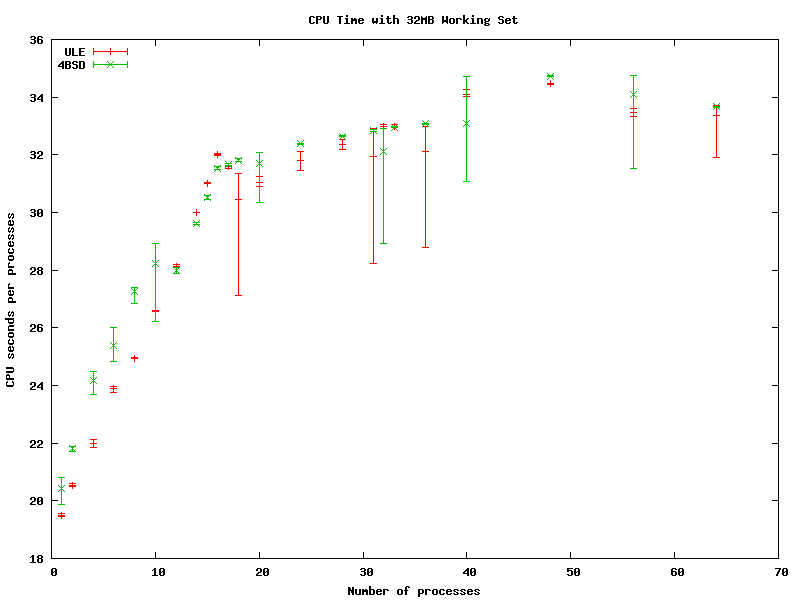

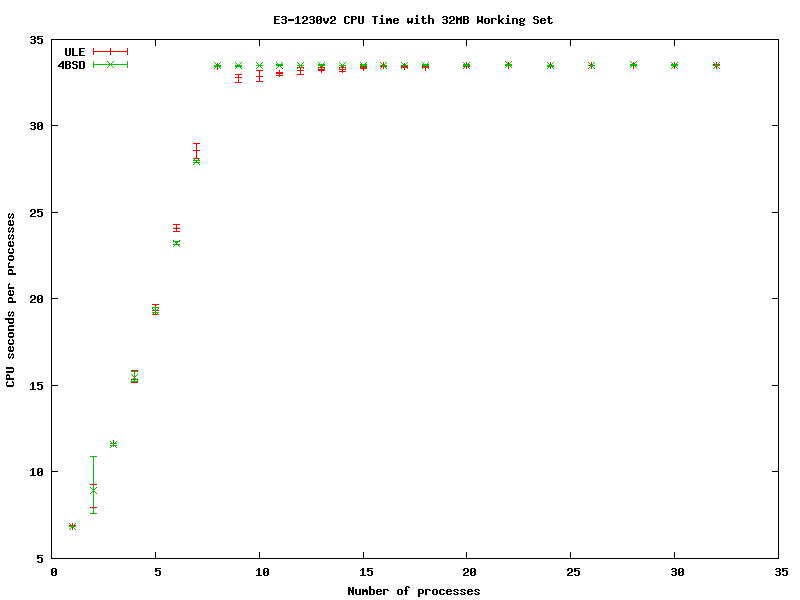

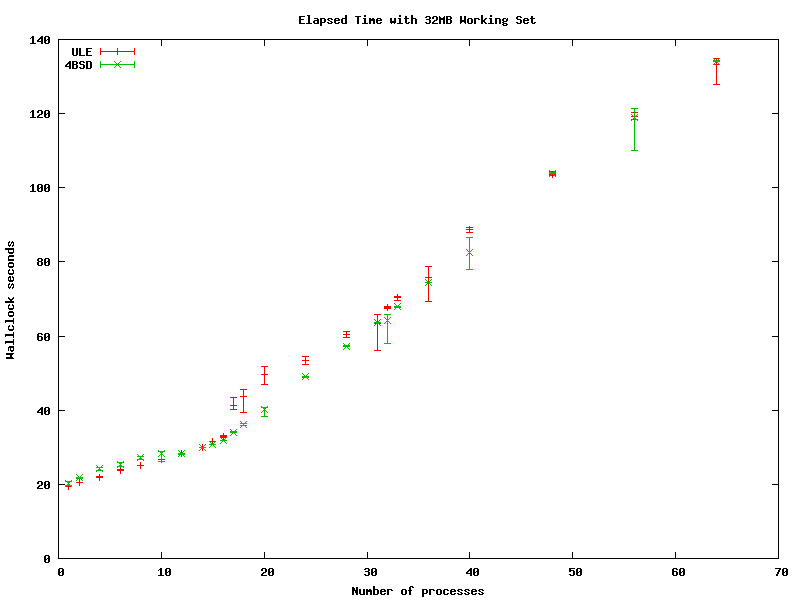

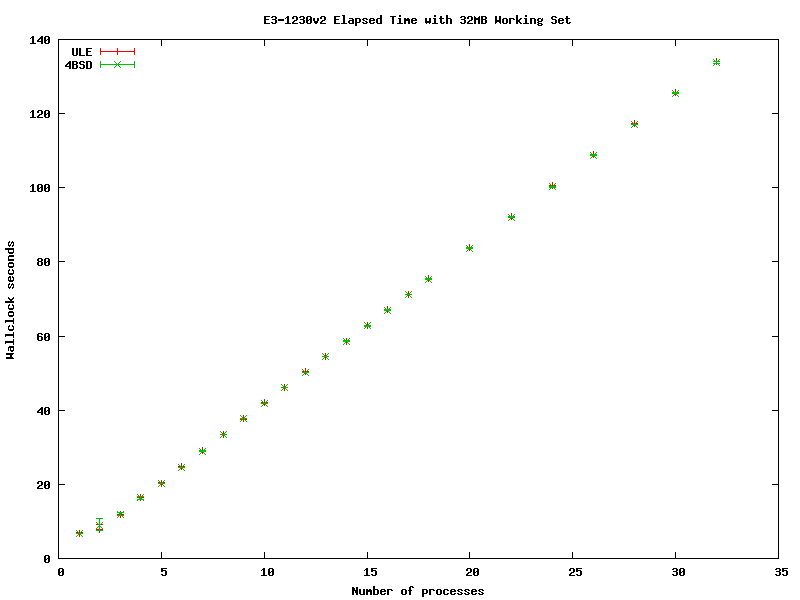

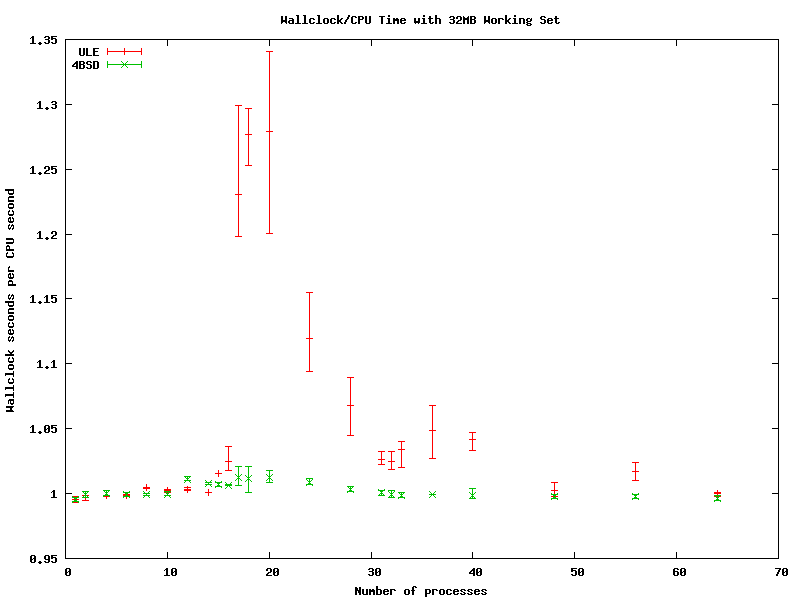

32MiB Working Set

This is the case where every process is cache busting. 5e8 iterations were used.

The CPU time graph shows that ULE generally uses less CPU time, the exception being between 12 and 16 processes. On the downside, the overall efficiency takes a significant hit, with a roughly 60% overhead for more than 16 processes.

The wallclock time graph shows that both schedulers behave similarly except between 16 and 30 processes, where ULE can run the tasks quicker.

The 4BSD scheduler maintains a fairly constant efficiency, with a slight bump between about 16 and 32 processes. On the other hand, ULE provides a constant efficiency between 1 and 14 processes but then shows the same sawtooth pattern that was seen with the 1KiB and 4MiB working sets.